PDF Snapshot Testing with Node and GraphicsMagick

In this guide, we'll explore how to generate downloadable PDF files and use snapshot testing to automatically detect any regressions in the code.

After evaluating various methods, we decided to implement a visual snapshot approach similar to Jest's snapshot testing.

We'll use GraphicsMagick for the automated comparison in our tests and ImageMagick for inspecting differences by hand.

Setup

To get started, we need to install GraphicsMagick for our test scripts and ImageMagick for interactive use. GraphicsMagick is well-supported via Node modules, while ImageMagick is user-friendly for command-line operations like generating and viewing comparisons.

On a Mac, you can install these tools and Node modules using:

    brew install graphicsmagick
    brew install imagemagick
    yarn add gm @types/gm

Installation steps may vary for other platforms.

Code

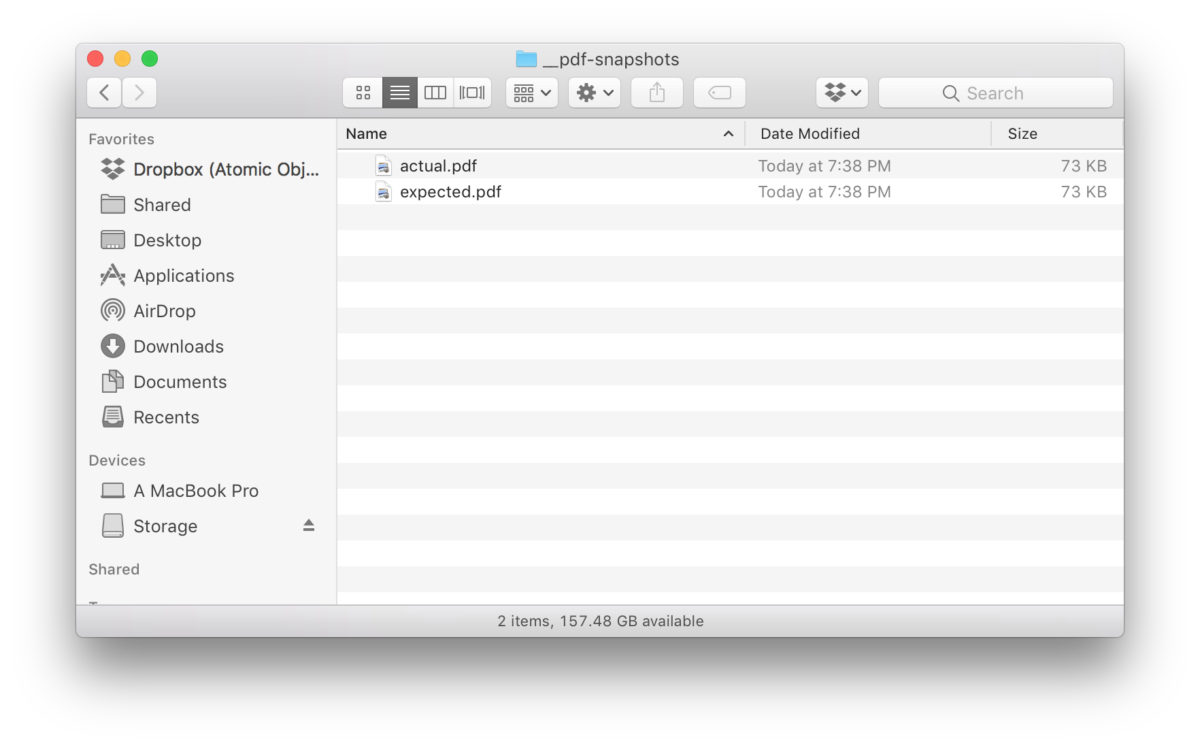

We'll start by defining paths to store our expected and actual PDF files. The PDFs are stored in a __pdf-snapshots sub-directory, making them easy to locate near our test code.

    import * as path from 'path';

    const reportPath = path.join(__dirname, '__pdf-snapshots');
    const actualFileName = path.join(reportPath, 'actual.pdf');
    const expectedFileName = path.join(reportPath, 'expected.pdf');
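
Writing actual.pdf may fail if the snapshot directory does not exist yet, for example on a fresh checkout before any expected PDF has been committed. A minimal sketch of creating it up front, assuming Node's built-in fs module; the ensureSnapshotDir name is hypothetical and not part of the original code:

```typescript
import * as fs from 'fs';
import * as os from 'os';
import * as path from 'path';

// Hypothetical helper: create the snapshot directory if it is missing.
// `recursive: true` makes the call a no-op when the directory already exists.
const ensureSnapshotDir = (dir: string): string => {
  fs.mkdirSync(dir, { recursive: true });
  return dir;
};

// Example usage with a throwaway directory; in the test suite this would be
// path.join(__dirname, '__pdf-snapshots').
const exampleDir = ensureSnapshotDir(
  path.join(os.tmpdir(), 'pdf-snapshot-example', '__pdf-snapshots'),
);
```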

Next, we define a function to fetch the PDF from our server. The PDF is generated on-the-fly and sent to the client.

    const getPdf = async (id: number) => {
      const reportUrl = `https://localhost:3100/report/${id}`;
      await download(reportUrl, actualFileName);
    };
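
The download helper called by getPdf is not shown in this post. One possible definition is sketched below, assuming Node 18 or later, where fetch is available globally. Treat it as an illustration rather than the original implementation:

```typescript
import { promises as fsp } from 'fs';

// Hypothetical stand-in for the `download` helper used by getPdf.
// Fetches a URL and writes the response body to `destination`.
const download = async (url: string, destination: string): Promise<void> => {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Download failed: ${response.status} ${response.statusText}`);
  }
  // Buffer the whole body in memory; fine for small report PDFs.
  const body = Buffer.from(await response.arrayBuffer());
  await fsp.writeFile(destination, body);
};
```

Buffering the whole response keeps the sketch short. For very large PDFs, streaming the body to disk would use less memory.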

We then create a function to compare a single page of the newly generated (actual) PDF against the expected PDF. This is done using the compare function from the GraphicsMagick Node module.

You can specify the page number of the PDF in square brackets ([]). Here, we compare a single-page PDF using page 0 for both files.

We aim for a precise comparison, setting the tolerance level to 0. For more details, refer to the documentation of the compare function.

To use await with this function, we wrap it in a promise to handle the callback style of the GraphicsMagick interface.

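    const isPdfPageEqual = (a: string, aPage: number, b: string, bPage: number) => {
      // gm.compare is callback-based, so wrap it in a promise to allow await
      return new Promise<boolean>((fulfill, reject) => {
        // `file.pdf[N]` selects page N; a tolerance of 0 demands an exact match
        gm.compare(`${a}[${aPage}]`, `${b}[${bPage}]`, { tolerance: 0 }, (err, isEqual) => {
          if (err) {
            // return here so fulfill is not also called after a failure
            return reject(err);
          }
          fulfill(isEqual);
        });
      });
    };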
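
The comparison above checks a single page. For a report that spans several pages, the same check can be repeated per page index. The sketch below assumes the page count is known in advance and takes the page comparer as a parameter, so a function like isPdfPageEqual (assumed to resolve to a boolean) can be passed in. PageComparer and arePdfPagesEqual are hypothetical names introduced here:

```typescript
// Signature of a single-page comparer such as isPdfPageEqual.
type PageComparer = (a: string, aPage: number, b: string, bPage: number) => Promise<boolean>;

// Compares pages 0..pageCount-1 of both files and stops at the first mismatch.
const arePdfPagesEqual = async (
  comparePage: PageComparer,
  a: string,
  b: string,
  pageCount: number,
): Promise<boolean> => {
  for (let page = 0; page < pageCount; page++) {
    if (!(await comparePage(a, page, b, page))) {
      return false;
    }
  }
  return true;
};
```

Passing the comparer in also makes the loop easy to exercise in isolation with a fake comparer, without GraphicsMagick installed.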

Next, we define a snapshot function that works as follows:

- If the expected PDF file doesn't exist, it copies the actual PDF to the expected PDF and passes the test.

- If the expected PDF file exists, it compares it with the actual PDF and raises an error if they don't match.

If the PDFs don't match, we can inspect them manually. If the changes are intended, rerun the test with the UPDATE environment variable set; this overwrites the expected PDF with the actual PDF and passes the test.

    const snapshot = async () => {
      if (process.env.UPDATE || !(await exists(expectedFileName))) {
        // No snapshot yet, or an update was requested: the actual PDF becomes the expected one.
        // copyFile returns a promise; awaiting stream.pipe() would not wait for the copy to finish.
        await fs.promises.copyFile(actualFileName, expectedFileName);
      } else {
        const helpText = [
          'Actual contents of PDF did not match expected contents.',
          'To see comparison of the expected and actual PDFs, run:',
          `compare -metric AE ${expectedFileName} ${actualFileName} /tmp/comparison.pdf; open ${expectedFileName} ${actualFileName} /tmp/comparison.pdf`,
        ].join('\n\n');
        return expect(await isPdfPageEqual(expectedFileName, 0, actualFileName, 0), helpText).to.be.true;
      }
    };
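
The snapshot function calls an exists helper that this post does not define. A possible definition, assuming Node's fs.promises API, is sketched below as an illustration rather than the original code:

```typescript
import { promises as fsp } from 'fs';

// Hypothetical `exists` helper: resolves to true if the path can be accessed,
// false otherwise. fs.promises.access rejects when the path is missing.
const exists = async (file: string): Promise<boolean> => {
  try {
    await fsp.access(file);
    return true;
  } catch {
    return false;
  }
};
```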

Finally, we write a simple test to exercise this method.

    describe('Report PDFs', () => {
      it('can generate a PDF', async () => {
        // generate test data
        const order = generateTestOrder();

        // fetch actual pdf
        await getPdf(order.id);

        // compare snapshot of actual and expected pdfs
        await snapshot();
      });
    });

Execution

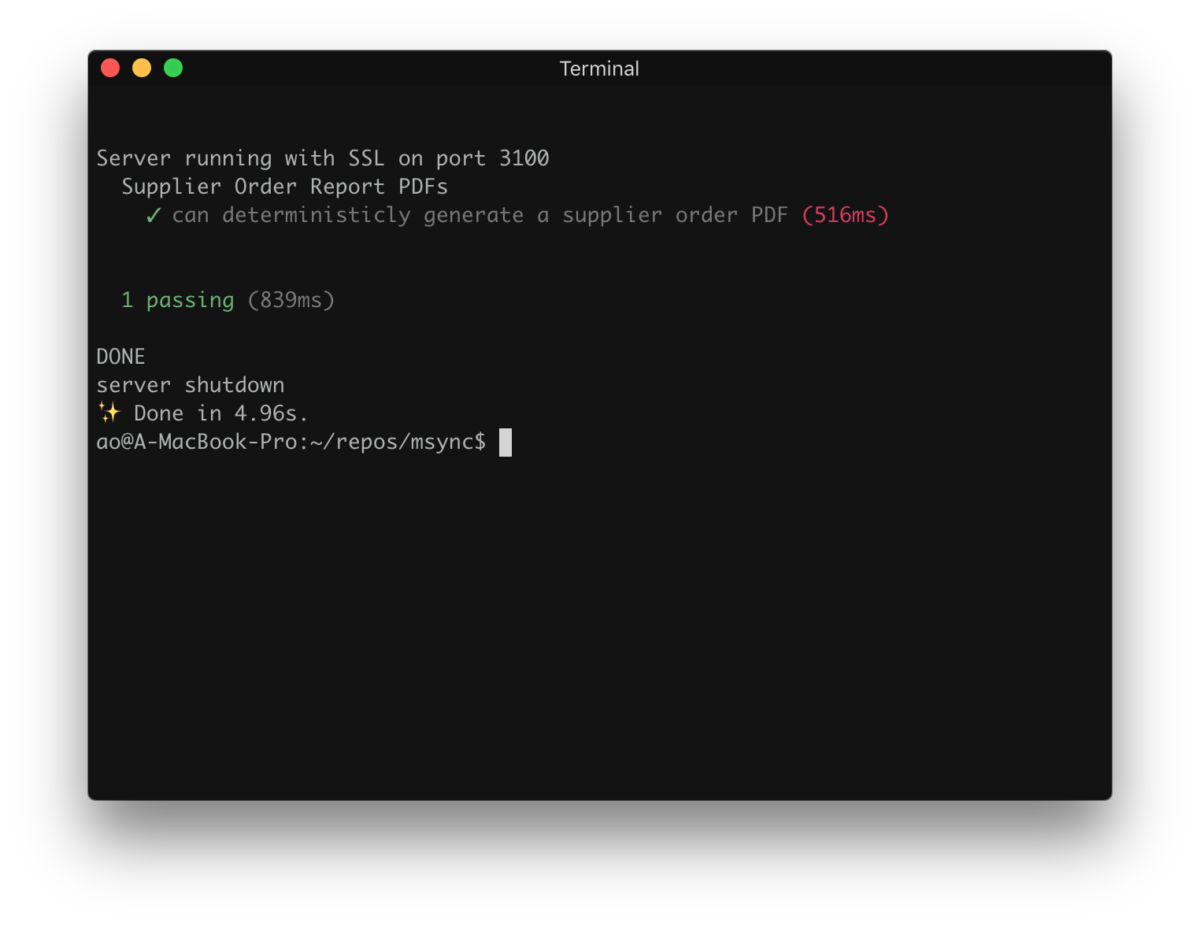

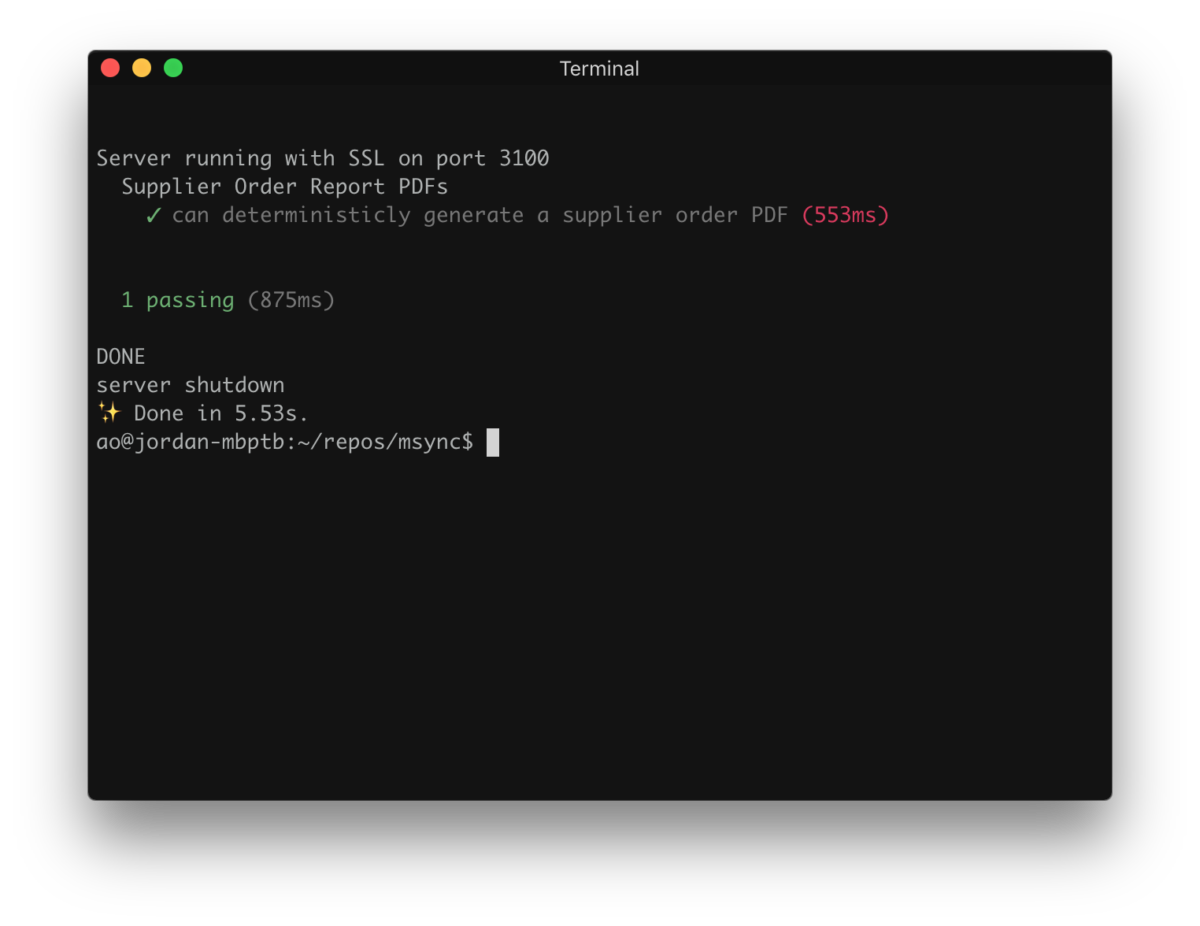

First, run the test to ensure everything is set up correctly.

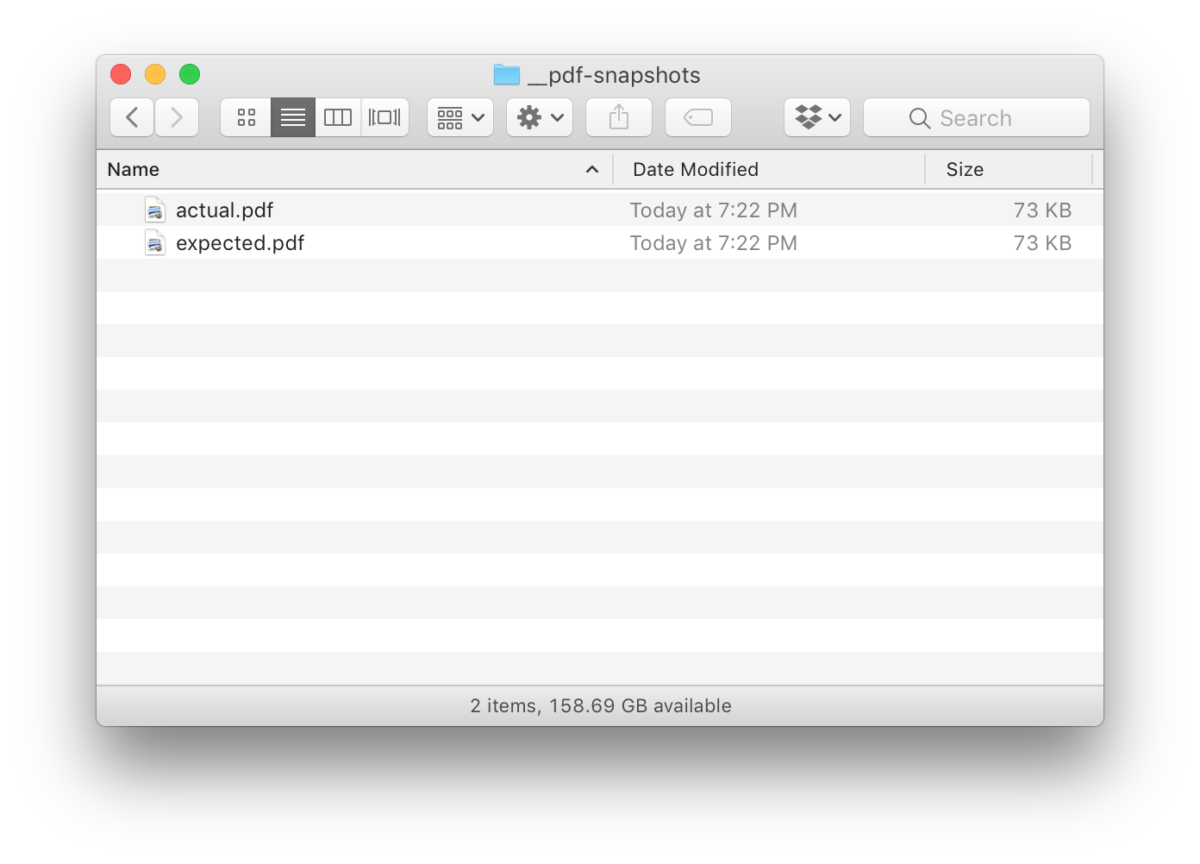

You should see that both the actual and expected PDFs have the same content.

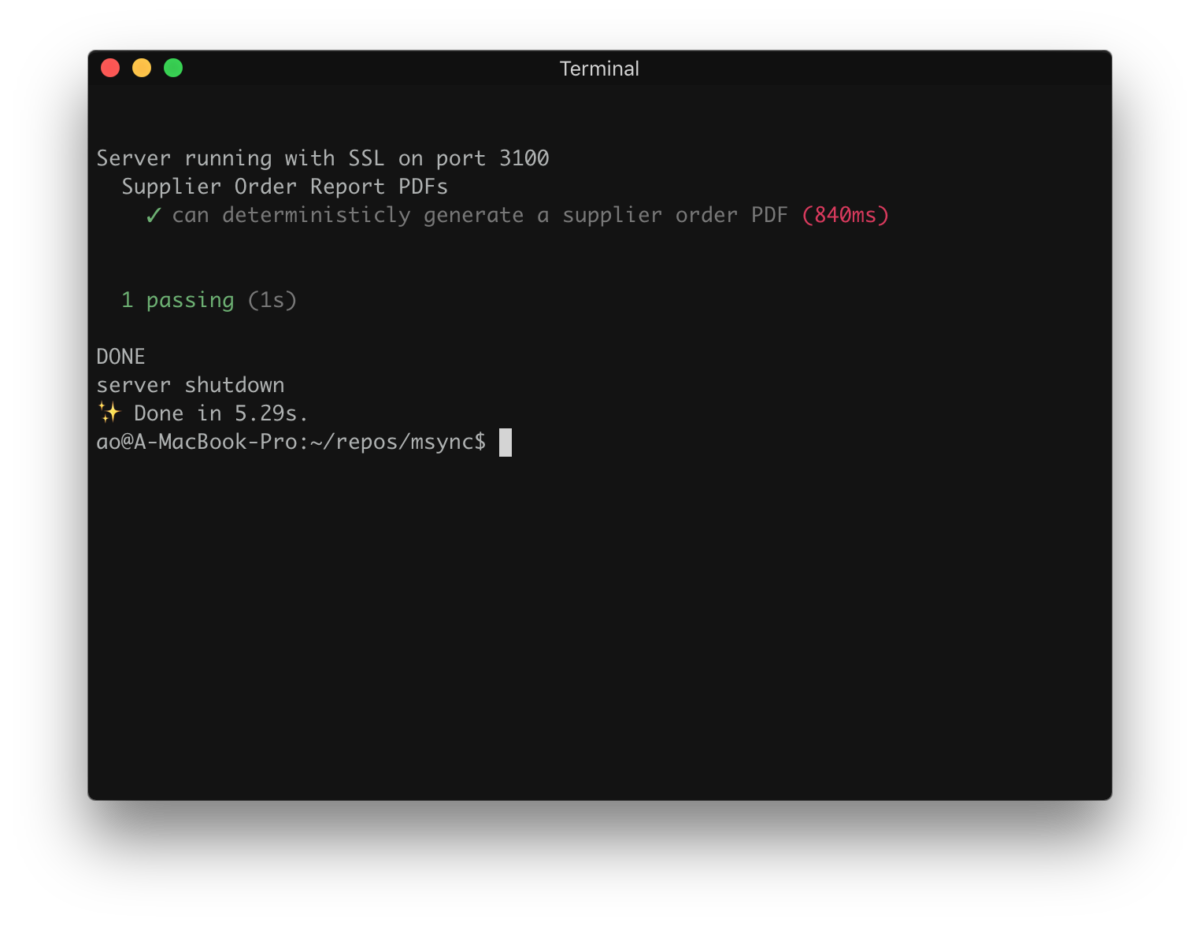

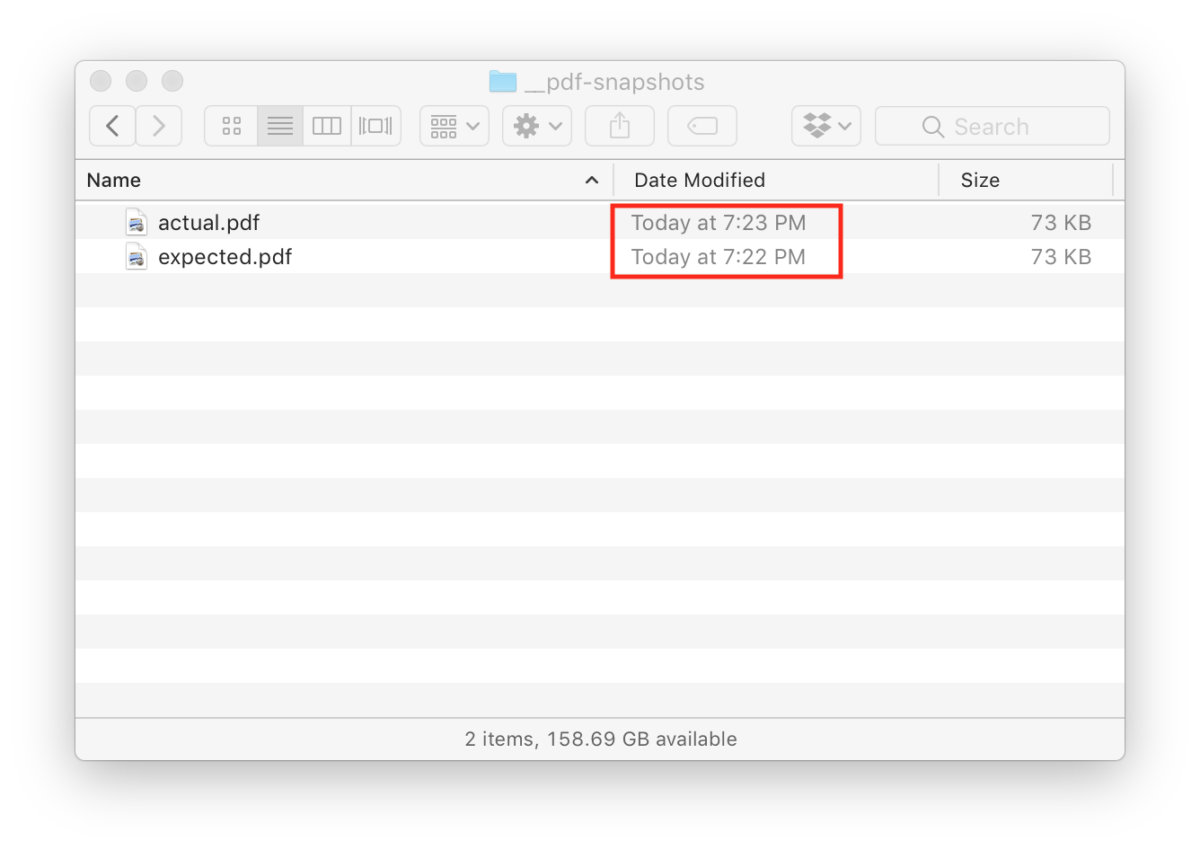

Run the test again to verify that only the actual PDF is updated.

Notice that the timestamp for the actual PDF has changed, but the expected PDF remains the same.

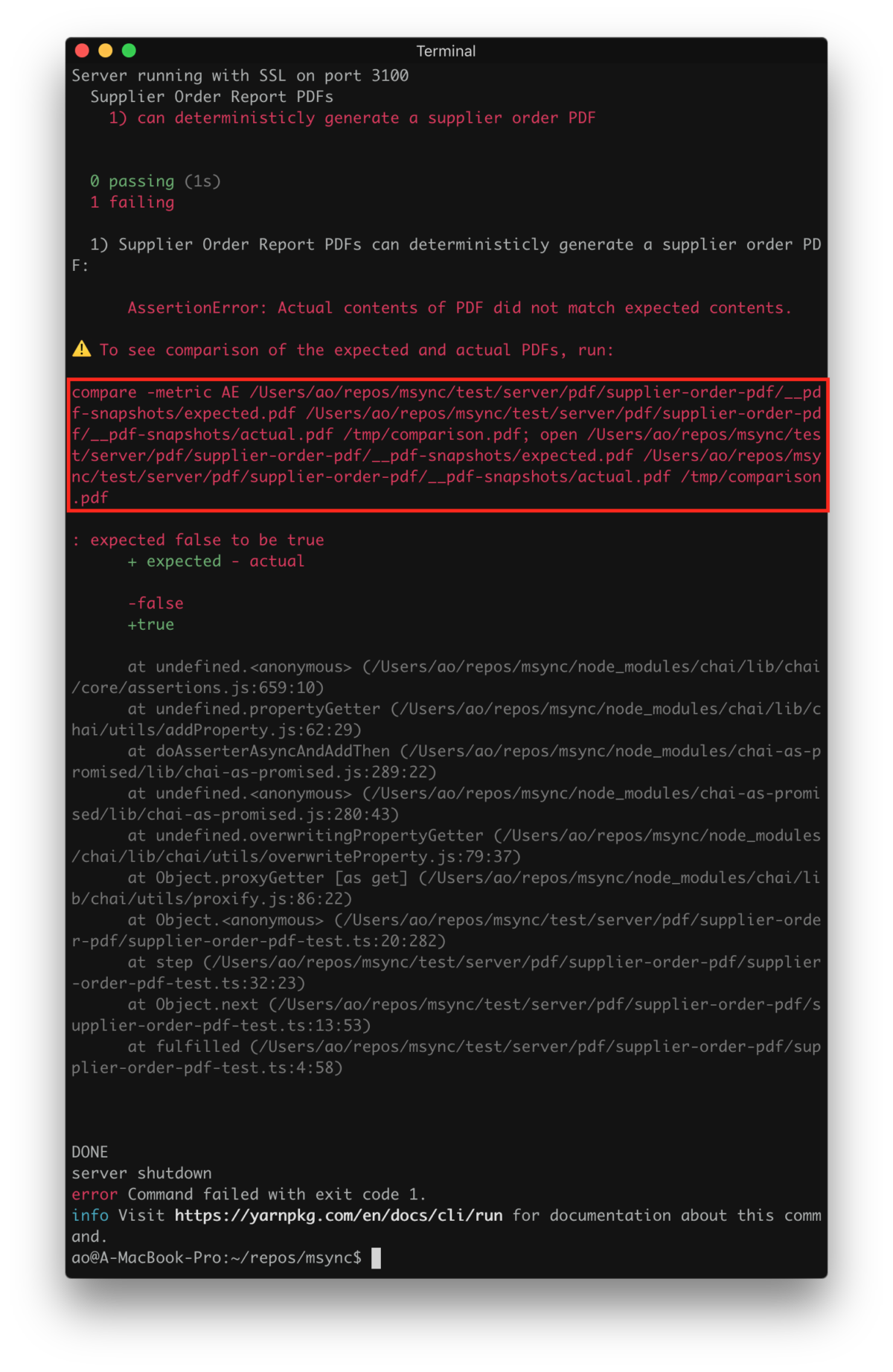

Modify your implementation and re-run the test.

The test should detect any changes between the actual and expected PDFs, reporting them as a test failure.

Manually inspect the expected PDF, the actual PDF, and a visual comparison of the two. To see the differences, run the command printed in the test failure message.

Observe the differences:

If the changes are acceptable, rerun the test with the UPDATE environment variable set.
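
One thing to keep in mind: a truthiness check on process.env.UPDATE treats any non-empty string as true, so UPDATE=0 or UPDATE=false would still overwrite the expected PDF. If that is a concern, a stricter parse could be used. The shouldUpdateSnapshot helper below is a hypothetical sketch and not part of the original code:

```typescript
// Hypothetical helper: only explicit opt-in values count as a request to update.
const shouldUpdateSnapshot = (value: string | undefined): boolean => {
  if (value === undefined) {
    return false;
  }
  const normalized = value.trim().toLowerCase();
  return normalized === '1' || normalized === 'true' || normalized === 'yes';
};

// In the snapshot function, this would replace the bare process.env.UPDATE check:
// if (shouldUpdateSnapshot(process.env.UPDATE) || !(await exists(expectedFileName))) { ... }
```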

Finally, confirm that the expected PDF's timestamp is updated.

Add the new expected PDF to your repository and commit. In a continuous integration environment, any differences between actual and expected outputs will automatically trigger a test failure.